Put simply, a robots.txt file is a set of instructions for search engine bots. It is included in the source files of most websites and is meant mostly to manage the activity of good bots, such as web crawlers and search engine crawlers.

A robots.txt file tells search engine crawlers which pages or files they can or can’t request from your site. Its main purpose is to avoid overloading your site with requests; it is not a mechanism for keeping a web page out of Google. If you wish to keep a web page out of Google, use a noindex directive or password-protect the page.

In other words, a robots.txt file helps make sure that web crawlers don’t overtax the web server hosting the website or crawl pages that are not meant for public view.

How does a robots.txt file work?

A robots.txt file is just a plain text file with no HTML markup. It is hosted on the web server just like any other file on your website. For any website, the robots.txt file can usually be viewed by typing the full URL of the homepage and then adding /robots.txt.
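The rule for locating the file can be sketched in a few lines of Python. This is only an illustration: the function name `robots_txt_url` and the example.com address are assumptions made for the example, not part of any standard tool.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # robots.txt sits at the root of the host, so keep only the scheme
    # and host and drop the original path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL, used only for illustration
print(robots_txt_url("https://www.example.com/blog/post?id=1"))
```

Whatever page you start from on a site, the result points at the same file at the root of that host.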

This file is not linked from anywhere else on your website, so users are unlikely to come across it, but most web crawlers will look for this file first before crawling the rest of your website.

A robots.txt file gives instructions to bots but cannot enforce them. A good bot, such as a web crawler or a news feed bot, will attempt to visit the robots.txt file before viewing any other pages on a domain and will follow the instructions it finds. A bad bot, on the other hand, will either ignore the robots.txt file or process it in order to find the web pages that are prohibited.
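As a rough sketch of what a well-behaved bot does, the Python standard library module `urllib.robotparser` can read a robots.txt file and answer whether a given URL may be fetched. The rules, the bot name `ExampleBot`, the helper `is_allowed`, and the example.com URLs below are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules in robots_txt let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A polite crawler asks before each request and skips disallowed URLs
print(is_allowed(SAMPLE_RULES, "ExampleBot", "https://www.example.com/private/report.html"))
print(is_allowed(SAMPLE_RULES, "ExampleBot", "https://www.example.com/index.html"))
```

Nothing in this check stops a request from being sent. It only tells a cooperative bot what the site owner has asked for, which is why a bad bot can simply skip it.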

What is robots.txt used for?

A robots.txt file is mainly used to manage crawler traffic to your site and, in some cases, to keep a file off Google, depending on the file type:

- Web Page: robots.txt can be used to control crawling traffic if you think your server will be overwhelmed by requests from Google’s crawler, or to avoid crawling unimportant or similar pages on your site.

- Media File: robots.txt can control crawl traffic but also prevent images, videos, or audio files from showing up in Google search results.

- Resource File: robots.txt can block resource files such as unimportant images, scripts, or style files if you think that pages loaded without these resources will not be significantly affected by the loss. However, if the absence of these resources makes the page harder for Google’s crawler to understand, you should not block them, because Google won’t do a good job of analyzing pages that depend on those resources.

What protocols are used in a robots.txt file?

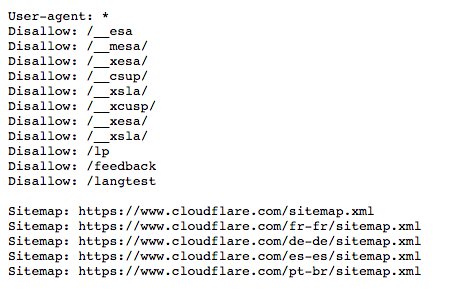

Robots.txt files use a couple of different protocols. The main one is the Robots Exclusion Protocol, which is a way to tell bots which pages and resources to avoid. Instructions formatted for this protocol are included in the robots.txt file.

The other protocol used in robots.txt files is the Sitemaps protocol. It can be thought of as a robots inclusion protocol. It helps make sure that a crawler bot won’t miss an important page.
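To show the two protocols side by side, here is a minimal sketch that parses a made-up robots.txt file containing one exclusion rule and one sitemap line. The file content, the bot name `ExampleBot`, and the URLs are assumptions for illustration, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt using both protocols, for illustration only
BOTH_PROTOCOLS = """\
User-agent: *
Disallow: /admin/
Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(BOTH_PROTOCOLS.splitlines())

# Robots Exclusion Protocol: which URLs should bots stay away from?
admin_allowed = parser.can_fetch("ExampleBot", "https://www.example.com/admin/login")

# Sitemaps protocol: where can bots find the list of pages to crawl?
sitemap_urls = parser.site_maps()

print("admin page allowed:", admin_allowed)
print("sitemaps:", sitemap_urls)
```

The `Disallow` line tells crawlers what to skip, while the `Sitemap` line points them to a list of pages the site owner wants found.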

What are the limitations of robots.txt?

Before you create or edit a robots.txt file, you should know the limits of this URL blocking method.

- Robots.txt directives may not be supported by all search engines

- Different crawlers interpret the syntax differently

- A page disallowed in robots.txt can still be indexed if other sites link to it